#2 Causality: A Quest for Learning

Overview of Causality

Dear reader,

Welcome to the ML Diaries.

Causality has been mathematized. - Judea Pearl

This article explores the idea of causality through “The Book of Why”, written (with Dana Mackenzie) by the well-known computer scientist and philosopher Judea Pearl, who received the Turing Award for his foundational work on probabilistic and causal reasoning in artificial intelligence.

TOC:

What is Causation?

Causation and correlation

Pearl’s ladder of causation

The do-operator

Interventions, Counterfactuals

Representation

Causal graph

Structural Causal Model: Tool for causal inference

Things to address

Causal representation learning

Spurious relationship due to confounding

Concluding remarks

References

What is Causation?

First, let’s understand what causation is.

The study of causality has a long history, going back to philosophers such as Aristotle (384–322 BC) and David Hume (1711–1776), who addressed questions like “what does it mean for something to be a cause?”. The work of Sewall Wright (1889–1988), Donald Rubin (b. 1943) and Judea Pearl (b. 1936) on causality gave the field its modern direction.

Pearl’s book is one of the most valuable resources for understanding causation through the question “Why?” [1].

Our brain has the gift of cognition, which may be the most powerful thing we will ever have. It has an intuitive grasp of cause-and-effect relationships. Pearl shows that these relationships can be defined in mathematical terms using the calculus of causation, which consists of two languages:

causal diagrams: to express what we know

a symbolic language (resembling algebra): to express what we want to know

Causation, then, is the relationship between cause and effect, where one event (the cause) brings about or influences another event (the effect).

For example, imagine you are playing with a toy car and you push it across the floor. The cause of the toy car moving is your push, and the effect is the toy car moving across the floor. Without the cause (you pushing the car), the effect (the car moving) would not have happened.

Causation in machine learning can be considered a quest for learning and understanding things from a human perspective.

In many cases we can emulate human retrospective thinking with an algorithm that takes what we know about the observed world and produces an answer about the counterfactual world.

Critics, however, argue that modern machine learning is a very powerful correlation-based pattern-recognition system: it works well on large datasets drawn from the same distribution it was trained on, but it is highly vulnerable to distribution shifts (for example, during real-time inference). At present, causal inference methods have mostly been developed for low-dimensional, relatively simply structured data, and they mostly focus either on identifying causal relationships or on assuming them in a causal model and quantifying their effects (causal effect estimation) [6].

To teach causality to machines, cognitive science and psychology are important, and a lot of experimental research is under way that will hopefully give us new directions for understanding and learning causality.

Causation and Correlation

We all know the famous saying, “Correlation is not causation”.

So is causation more important than correlation?

Correlation measures the strength and direction of a relationship between two or more variables, indicating that they change together. Causation indicates a cause-and-effect relationship, where a change in one variable directly leads to a change in another.
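To make the “strength and direction” part concrete, here is a minimal pure-Python sketch of the Pearson correlation coefficient, the most common correlation measure. The data are made-up numbers for illustration only. Note that the coefficient is symmetric, so on its own it cannot say which variable, if either, causes the other.

```python
import math

def pearson(xs, ys):
    """Pearson correlation: covariance divided by the product of standard deviations."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 points)")
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Made-up paired measurements of two variables A and B (hypothetical data).
a = [1.0, 2.0, 3.0, 4.0, 5.0]
b = [2.0, 4.1, 5.9, 8.2, 9.8]

r_ab = pearson(a, b)  # strength (close to 1) and direction (positive)
r_ba = pearson(b, a)  # identical value: the measure has no notion of direction
print(round(r_ab, 3), round(r_ba, 3))  # 0.999 0.999
```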

Causation is required to answer the questions we have, but much remains to be discovered before it can be put into practice, while modern algorithms depend heavily on correlation.

A contrary view comes from Chris Anderson, author of The Long Tail, who argued that in this age of data deluge we can dispense with causation, given enough data.

This is a world where massive amounts of data and applied mathematics replace every other tool that might be brought to bear. Out with every theory of human behavior, from linguistics to sociology. Forget taxonomy, ontology, and psychology. Who knows why people do what they do? The point is they do it, and we can track and measure it with unprecedented fidelity. With enough data, the numbers speak for themselves….

Correlation supersedes causation, and science can advance even without coherent models, unified theories, or really any mechanistic explanation at all.

- Chris Anderson

Anderson suggests [3] that correlations, which can easily be computed from huge quantities of data, are more important and valuable than attempts to develop explanatory frameworks. It is true that correlations can be valuable for making predictions, as long as they are not simply due to chance. But what they cannot do is tell us what will happen if we intervene to change something. For that, we need to know the causal relationship.
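That last point can be checked with a small simulation. The sketch below (Python, standard library only) builds two hypothetical models with made-up coefficients: in model A, X causes Y; in model B, Y causes X. Both generate exactly the same joint Gaussian distribution of X and Y, so no amount of observational data can tell them apart, yet they disagree on what happens under the intervention do(X = 2).

```python
import math
import random

def mean(v):
    return sum(v) / len(v)

def cov(a, b):
    """Population covariance of two equal-length sequences."""
    ma, mb = mean(a), mean(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / len(a)

def model_a(rng, do_x=None):
    """Hypothetical model A (X -> Y): X ~ N(0, 1), Y := X + N(0, 1)."""
    x = rng.gauss(0.0, 1.0) if do_x is None else do_x
    y = x + rng.gauss(0.0, 1.0)
    return x, y

def model_b(rng, do_x=None):
    """Hypothetical model B (Y -> X): Y ~ N(0, 2), X := Y/2 + N(0, 1/2)."""
    y = rng.gauss(0.0, math.sqrt(2.0))
    x = y / 2 + rng.gauss(0.0, math.sqrt(0.5)) if do_x is None else do_x
    return x, y

def summarize(model, n=50_000, seed=0):
    rng = random.Random(seed)
    xs, ys = zip(*(model(rng) for _ in range(n)))
    # Observational statistics: the same for both models, up to sampling noise.
    observed = {"var_x": cov(xs, xs), "var_y": cov(ys, ys), "cov_xy": cov(xs, ys)}
    # Interventional query: E[Y | do(X = 2)], forcing X = 2 in every sample.
    y_do = [model(rng, do_x=2.0)[1] for _ in range(n)]
    return observed, mean(y_do)

results = {name: summarize(m) for name, m in [("A", model_a), ("B", model_b)]}
for name, (observed, y_do) in results.items():
    print(name, {k: round(v, 2) for k, v in observed.items()}, "E[Y|do(X=2)] =", round(y_do, 2))
```

In model A, forcing X to 2 shifts Y to about 2; in model B, Y does not listen to X at all, so its mean stays near 0, even though the correlations are indistinguishable.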

In my opinion,

Causation and correlation are connected and can be used together, as both are valuable for recognising relationships and reasons when answering the questions we have. Neither is superior to the other.

The well-known butterfly effect describes how a small change can lead to a drastically different outcome over time, so a cause may well lie in a series of small past changes that are themselves correlated.

Pearl’s Ladder of Causation

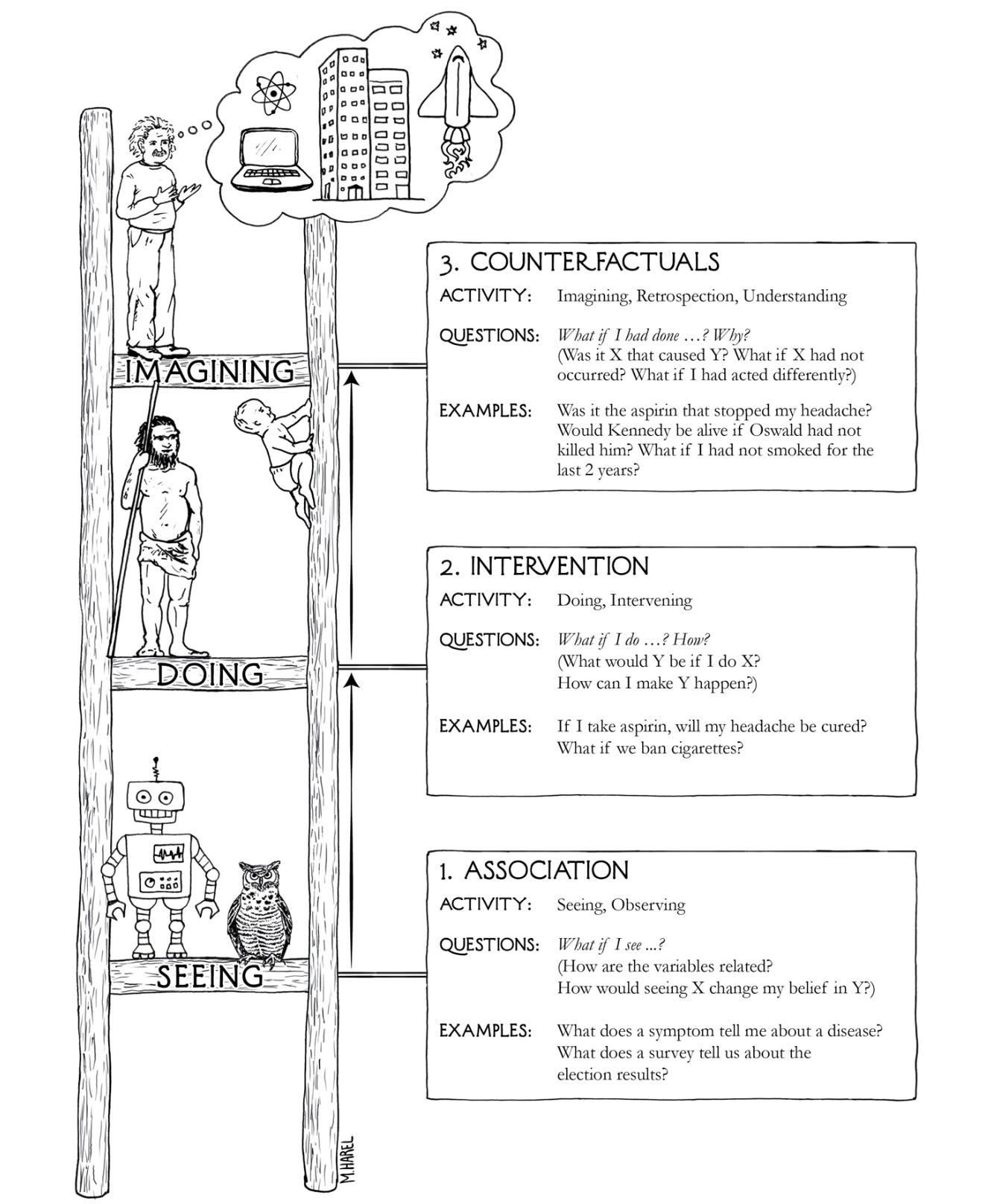

(Source: The Book of Why, Pearl’s Ladder of Causation, fig. 1.2, p. 34)

— Associational: Seeing, Observing

p(y | x): How would seeing x change my belief in Y?

E.g.: What does a symptom tell us about the disease?

Entails detection of regularities in our environment and is shared by many animals as well as early humans before the Cognitive Revolution

Calls for predictions based on passive observations

— Interventional: Doing, Intervening

p(y | do(x), z): What happens to Y if I do x?

E.g.: If I take aspirin, will my headache be cured?

Entails predicting the effect(s) of deliberate alterations of the environment and choosing among these alterations to produce a desired outcome

This already calls for a new kind of knowledge absent from the data

We cannot answer questions about interventions from passively collected data alone, no matter how large the dataset; one way to get the answers is to experiment under carefully controlled conditions.

— Counterfactual: Imagining, Retrospecting, Understanding

p(y_{x′} | x, y): Was it x that caused Y?

E.g.: Was it the aspirin that stopped my headache?

Can imagine worlds that do not exist and infer reasons for observed phenomena

Counterfactuals cannot be observed directly in data: data record facts, while counterfactuals are imaginary and retrospective.
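The gap between the first two rungs can be computed exactly on a toy model. In the sketch below, the variables and probabilities are entirely hypothetical: Z marks a severe headache, which makes people both more likely to take aspirin (X) and less likely to be cured (Y). Seeing (conditioning on X = x) and doing (setting X = x for everyone) then give opposite answers about aspirin.

```python
from itertools import product

# Hypothetical toy model (all numbers made up):
# Z = 1 if the headache is severe, X = 1 if aspirin is taken, Y = 1 if cured.
P_Z1 = 0.5                       # P(Z = 1)
P_X1_GIVEN_Z = {0: 0.2, 1: 0.8}  # severe headaches make taking aspirin more likely
P_Y1_GIVEN_XZ = {(0, 0): 0.6, (1, 0): 0.8, (0, 1): 0.2, (1, 1): 0.4}

def bern(p1, value):
    """Probability that a Bernoulli(p1) variable equals value (0 or 1)."""
    return p1 if value == 1 else 1.0 - p1

def joint(z, x, y, do_x=None):
    """P(Z=z, X=x, Y=y); with do_x set, X's mechanism is replaced by the constant do_x."""
    p_x = bern(P_X1_GIVEN_Z[z], x) if do_x is None else float(x == do_x)
    return bern(P_Z1, z) * p_x * bern(P_Y1_GIVEN_XZ[(x, z)], y)

def p_y1_seeing(x):
    """Rung 1: P(Y=1 | X=x), restricted to the sub-population that took X = x."""
    num = sum(joint(z, x, 1) for z in (0, 1))
    den = sum(joint(z, x, y) for z, y in product((0, 1), repeat=2))
    return num / den

def p_y1_doing(x):
    """Rung 2: P(Y=1 | do(X=x)), forcing X = x for the whole population."""
    return sum(joint(z, x, 1, do_x=x) for z in (0, 1))

print("seeing:", round(p_y1_seeing(1), 2), round(p_y1_seeing(0), 2))  # aspirin looks harmful
print("doing: ", round(p_y1_doing(1), 2), round(p_y1_doing(0), 2))    # aspirin actually helps
```

The third rung needs more than these probability tables: it requires the structural equations and noise terms of a full causal model.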

The do-operator

The do-operator represents interventions on, or manipulations of, a system.

Written do(X = x), it represents an intervention that sets the value of a variable X to x. This intervention is different from simply observing, or conditioning on, X = x.

Intervention: The do-operator represents an active intervention that changes the value of X, rather than just observing or passively recording its value.

Causal effect: The do-operator is used to estimate the causal effect of an intervention on an outcome variable.

Graphical representation: The do-operator can be represented graphically using causal graphs, which help to visualize the causal relationships between variables.

Example:

Suppose we want to estimate the causal effect of a treatment X on an outcome Y. Using the do-operator, we can represent the intervention as do(X = 1) for treated individuals and do(X = 0) for control individuals. The causal effect can then be estimated by comparing the outcomes under these two interventions.
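A minimal Monte Carlo sketch of this comparison is shown below. The structural equations, the coefficients and the confounder Z are all hypothetical. The do-operator is implemented by overwriting X’s equation with a constant, which is exactly what distinguishes do(X = x) from merely observing X = x.

```python
import random

# Hypothetical SCM (made-up coefficients): Z -> X, Z -> Y, X -> Y.
# Each entry maps a variable to a function of (values computed so far, rng).
EQUATIONS = {
    "Z": lambda v, rng: rng.gauss(0.0, 1.0),
    "X": lambda v, rng: 1 if v["Z"] + rng.gauss(0.0, 1.0) > 0 else 0,
    "Y": lambda v, rng: 3.0 * v["X"] + 2.0 * v["Z"] + rng.gauss(0.0, 1.0),
}

def sample(equations, n, seed, do=None):
    """Draw n samples in topological order; `do` maps a variable to a forced value."""
    do = do or {}
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        values = {}
        for name, f in equations.items():  # dict insertion order is the topological order here
            values[name] = do[name] if name in do else f(values, rng)
        rows.append(values)
    return rows

def mean_y(rows):
    return sum(r["Y"] for r in rows) / len(rows)

n = 50_000
# Causal effect: compare the outcome under do(X = 1) and under do(X = 0).
ate = mean_y(sample(EQUATIONS, n, seed=1, do={"X": 1})) - mean_y(sample(EQUATIONS, n, seed=2, do={"X": 0}))

# Naive comparison of observed treated vs. untreated individuals.
obs = sample(EQUATIONS, n, seed=3)
naive = mean_y([r for r in obs if r["X"] == 1]) - mean_y([r for r in obs if r["X"] == 0])

print(f"E[Y|do(X=1)] - E[Y|do(X=0)] = {ate:.2f}")  # close to 3, the coefficient on X
print(f"E[Y|X=1] - E[Y|X=0]         = {naive:.2f}")  # inflated by the confounder Z
```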

— Interventions

An intervention is a deliberate action taken to modify or control a variable of interest in order to observe its effect on an outcome, which we denote by the do-operator.

Example:

Say we are interested in estimating p(s | do(d)). Unlike the conditional p(s | d), p(s | do(d)) denotes the distribution of S when we intervene on D, i.e. when we set it to d. This means that we are not considering the sub-population for which we happen to observe D = d; instead, we reason about what happens to the whole population after taking the action do(d).
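Assuming, purely for illustration, that a single observed variable C (not part of the original example) is the only common cause of D and S, the two quantities differ in exactly one factor. Conditioning weights each stratum of C by how common it is among units with D = d, while intervening weights it by how common it is in the whole population (the back-door adjustment formula):

$$
\begin{aligned}
p(s \mid d) &= \sum_{c} p(s \mid d, c)\, p(c \mid d) \\
p(s \mid \mathrm{do}(d)) &= \sum_{c} p(s \mid d, c)\, p(c)
\end{aligned}
$$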

— Counterfactual

“If we had turned the sprinkler off, the slipperiness would have been low.”

Counterfactuals are hypotheticals: “if” statements in which the “if” portion is unrealized.

Counterfactual reasoning, which deals with what-ifs, is one of the building blocks of moral behaviour as well as scientific thought. The ability to reflect on one’s past actions and envision alternative scenarios is the basis of free will and social responsibility.

The algorithmization of counterfactuals invites thinking machines to benefit from this ability and participate in this uniquely human way of thinking about the world[1].

Counterfactuals get to the heart of what makes causation so perplexing. We can only observe what actually happened, not what might or could have happened. An evaluation of a causal effect is thus not possible without making assumptions or incorporating information external to the connection in question. One way to do this is by using a substitute for the unobservable counterfactual.

In my opinion, counterfactuals can be seen as the freedom to think “what if?”, which opens up enormous possibilities for exploration.

Example:

Consider a medical treatment where p(y | x, do(t)) describes the scenario in which all patients with medical history features x take treatment t. Perhaps the most obvious counterfactual quantity we can think of in this scenario is p(y_{t′} | x, t, y). How do p(y | x, do(t′)) and p(y_{t′} | x, t, y) semantically differ? The latter can be interpreted as imagining the former “after the fact”, once x, t and y have been observed.

So instead of asking “what happens if we give treatment t′ to patient x?”, the latter is retrospective, asking “what would have happened if we had given treatment t′ to patient x instead of t?”.
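Pearl’s three-step recipe for counterfactuals (abduction, action, prediction) can be sketched on a tiny linear SCM. The equation and coefficients are hypothetical, and the linear additive-noise form is an assumption that makes the abduction step a simple subtraction. The counterfactual uses what we learned about this particular patient (their noise term), whereas the interventional quantity averages over all patients with the same x.

```python
# Hypothetical linear SCM (coefficients made up for illustration):
#   Y := 2.0 * T + 0.5 * X + U_Y,  with noise U_Y of mean 0
BETA_T, BETA_X = 2.0, 0.5

def outcome(t, x, u):
    """Structural equation for Y."""
    return BETA_T * t + BETA_X * x + u

def interventional_mean(x, t_new):
    """E[Y | x, do(T = t_new)]: average over the noise, whose mean is 0."""
    return outcome(t_new, x, 0.0)

def counterfactual(x, t, y, t_new):
    """y_{t_new} for one patient observed with (x, t, y)."""
    u = y - BETA_T * t - BETA_X * x  # 1. abduction: recover this patient's noise term
    return outcome(t_new, x, u)      # 2. action (T := t_new) and 3. prediction

print(interventional_mean(x=4.0, t_new=0.0))           # 2.0 (population-level answer)
print(counterfactual(x=4.0, t=1.0, y=5.0, t_new=0.0))  # 3.0 (this patient's answer)
```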

Causal Diagram

Causal diagrams are simply dot-and-arrow pictures that summarize our existing scientific knowledge. The dots represent quantities of interest, called “variables”, and the arrows represent known or suspected causal relationships between those variables, namely which variable “listens” to which others.

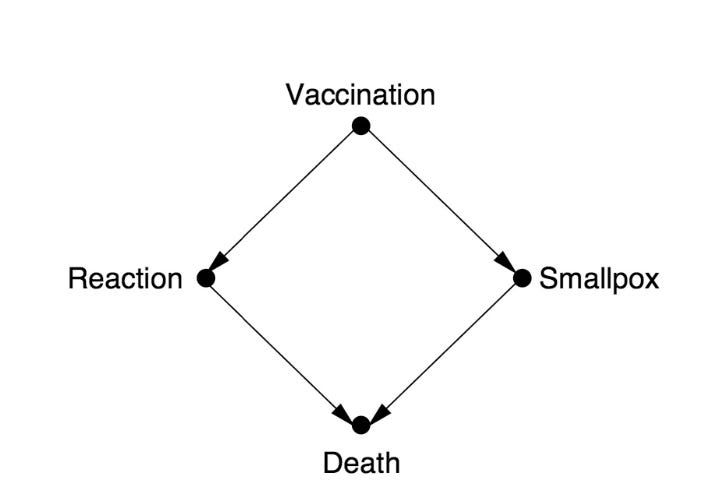

Ex: Causal diagram for the vaccination example. Is vaccination beneficial or harmful?

(Source: The Book of Why, Causal diagram for the vaccination example, fig. 1.7, p. 51)

A causal model entails more than merely drawing arrows. Behind the arrows, there are probabilities: an arrow from X to Y implies a probability rule that specifies how Y would change if X were to change. It is important to note that every edge in a causal diagram indicates a direct causal effect, not an indirect effect or a correlation through other nodes of the graph. This not only makes our graphs less complex, but also enables us to model the effect of interventions. Causal diagrams let us estimate simple and complicated, deterministic and probabilistic, linear and nonlinear causal and counterfactual relationships.
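In code, a causal diagram is just a directed graph. The sketch below stores each node’s parents (its direct causes), checks that the graph is acyclic with a topological sort, and shows why direct edges make interventions easy to model: do(X = x) corresponds to deleting every arrow into X. The edge list is an assumed reading of the vaccination diagram (Vaccination → Reaction → Death and Vaccination → Smallpox → Death), and the helper names are hypothetical.

```python
from collections import deque

# Assumed reading of the vaccination diagram: each node maps to its direct causes.
PARENTS = {
    "Vaccination": [],
    "Reaction": ["Vaccination"],
    "Smallpox": ["Vaccination"],
    "Death": ["Reaction", "Smallpox"],
}

def topological_order(parents):
    """Kahn's algorithm; raises ValueError if the graph has a cycle (i.e. is not a DAG)."""
    children = {v: [] for v in parents}
    indegree = {v: len(ps) for v, ps in parents.items()}
    for v, ps in parents.items():
        for p in ps:
            children[p].append(v)
    queue = deque(v for v, d in indegree.items() if d == 0)
    order = []
    while queue:
        v = queue.popleft()
        order.append(v)
        for c in children[v]:
            indegree[c] -= 1
            if indegree[c] == 0:
                queue.append(c)
    if len(order) != len(parents):
        raise ValueError("graph contains a cycle")
    return order

def do(parents, node):
    """Graph surgery for do(node = value): cut every arrow pointing into `node`."""
    return {v: ([] if v == node else list(ps)) for v, ps in parents.items()}

print(topological_order(PARENTS))  # causes always come before their effects
print(do(PARENTS, "Reaction"))     # Reaction no longer listens to Vaccination
```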

A causal diagram can be a much more robust and powerful tool for conveying causality. One benefit is that the estimand derived from the diagram stays valid when new data arrive, so the same estimand can be applied to the new data to produce a new estimate for the query we want to answer.

Structural Causal Model: Tool for causal inference

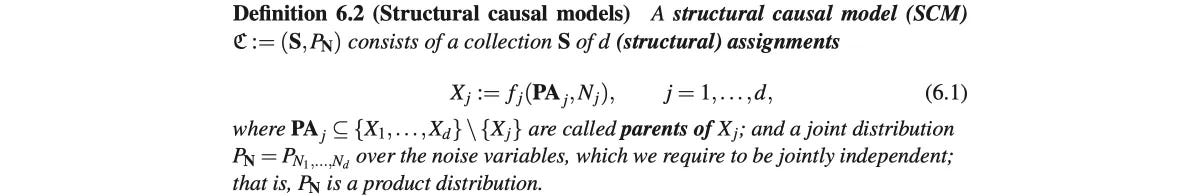

A causal diagram is a directed acyclic graph (DAG), which can depict causal relations only in a binary way: either there is a cause-effect relation between two variables, in which case there is a directed edge from cause to effect in the graph, or there is none, and therefore no edge.

A structural causal model (SCM) [5], also known as a structural equation model, expresses causal relationships through deterministic, functional equations. This formalism reflects Laplace’s conception of natural laws as deterministic, with randomness being a purely epistemic notion. An SCM is helpful for describing the characteristics of the discovered causal relationships.

In an SCM, every variable has its own equation describing the functional mechanism by which the variable’s parents influence it. SCMs include more than the influence of the parents, though, because we want causal relations to be described in a probabilistic manner: instead of saying that every time X changes by 1 unit, Y changes by 5 units, we acknowledge that some random noise N could alter the actual effect one way or another.
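The “5 units plus noise” example can be written as a two-variable SCM and sampled directly. This is a minimal sketch assuming standard normal noise terms, which the text does not specify. Individual points scatter around the line y = 5x, yet a least-squares fit recovers the mechanism; this works here only because nothing confounds X and Y in this toy model.

```python
import random

# Hypothetical SCM built from the example in the text (noise distributions assumed):
#   X := N_X,          N_X ~ N(0, 1)
#   Y := 5 * X + N_Y,  N_Y ~ N(0, 1)
def sample_scm(n, seed=0):
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)            # X has no parents: only its own noise
        y = 5.0 * x + rng.gauss(0.0, 1.0)  # Y listens to its parent X, plus noise N_Y
        xs.append(x)
        ys.append(y)
    return xs, ys

def ols_slope(xs, ys):
    """Least-squares slope of Y regressed on X."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

xs, ys = sample_scm(10_000)
noise_part = [y - 5.0 * x for x, y in zip(xs, ys)]  # what the mechanism alone does not explain
slope = ols_slope(xs, ys)
print(round(slope, 2))  # close to 5
```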

A formal definition is provided by Peters et al. (2017) in the book Elements of Causal Inference [7].

(Source: Peters et al., Elements of Causal Inference: Foundations and Learning Algorithms, definition of a structural causal model)
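Paraphrased in symbols (a summary, not a verbatim quote), an SCM consists of d structural assignments, each giving a variable as a function of its parents and its own noise term, together with a distribution over noise variables that are required to be jointly independent. The graph of the SCM has one node per X_j and an arrow from each parent in PA_j to X_j, and it is assumed to be acyclic:

$$
\begin{aligned}
X_j &:= f_j(\mathbf{PA}_j, N_j), \qquad \mathbf{PA}_j \subseteq \{X_1, \dots, X_d\} \setminus \{X_j\}, \qquad j = 1, \dots, d, \\
P_{\mathbf{N}} &= P_{N_1} \otimes \cdots \otimes P_{N_d}.
\end{aligned}
$$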

Causal Representation Learning

Causal representation learning aims to discover hidden causal variables and their relationships from observed data, enabling more robust and generalizable AI models. It focuses on understanding the underlying causal structure rather than just correlations, which helps with better predictions, interventions and explanations of phenomena.

Traditional machine learning models primarily focus on prediction, often relying on correlations within the data. Causal representation learning goes a step further by trying to uncover the causal mechanisms that generate the data. It seeks to identify the underlying causal variables and their relationships, even when these variables are latent, that is, not directly observed.

Spurious Relationships due to Confounding

A spurious relationship is a mathematical relationship in which two or more events or variables are associated but not causally related, due either to coincidence or to the presence of a third, unseen factor known as a “confounding factor” or “lurking variable”.

An example of a spurious relationship can be seen by examining a city’s ice cream sales. The sales might be highest when the rate of drownings in city swimming pools is highest. To allege that ice cream sales cause drowning, or vice versa, would be to imply a spurious relationship between the two. In reality, a heat wave may have caused both. The heat wave is an example of a hidden or unseen variable, also known as a confounding variable.
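A small simulation makes the confounding visible. In the hypothetical model below (all numbers made up), temperature drives both ice cream sales and drownings, and there is no causal link between the two. Their raw correlation is clearly positive, but once the linear effect of temperature is regressed out of both (a partial correlation), the association essentially disappears. This adjustment only works because the sketch assumes linear relationships and a measured confounder; a truly hidden confounder could not be removed this way.

```python
import math
import random

def pearson(a, b):
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))

def residuals(target, covariate):
    """Residuals of the least-squares fit target ~ a + b * covariate."""
    mc = sum(covariate) / len(covariate)
    mt = sum(target) / len(target)
    sxy = sum((c - mc) * (t - mt) for c, t in zip(covariate, target))
    sxx = sum((c - mc) ** 2 for c in covariate)
    b = sxy / sxx
    return [t - mt - b * (c - mc) for c, t in zip(covariate, target)]

rng = random.Random(42)
n = 20_000
temp = [rng.gauss(25.0, 5.0) for _ in range(n)]              # the confounder (heat)
ice_cream = [10.0 * t + rng.gauss(0.0, 20.0) for t in temp]  # heat -> ice cream sales
drownings = [0.3 * t + rng.gauss(0.0, 1.5) for t in temp]    # heat -> drownings
# Note: there is no arrow between ice_cream and drownings in this model.

raw = pearson(ice_cream, drownings)
adjusted = pearson(residuals(ice_cream, temp), residuals(drownings, temp))
print(f"raw correlation:          {raw:.2f}")       # clearly positive
print(f"after adjusting for heat: {adjusted:.2f}")  # close to zero
```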

Spurious relationships like this should be addressed, as they can help explain why a model fails at real-time inference.

Concluding remarks

This article gave an overview of the basics of causality, the tools used to represent it, and things to consider for AI models. Causality can help us understand not just data, algorithms, relationships or a model’s reasoning, but also why and how things work. In this era of intelligence, causality is what we are trying to achieve in order to mimic human behaviour.

References

[1] J. Pearl and D. Mackenzie, The Book of Why: The New Science of Cause and Effect. 2018. [Online]. Available: https://cds.cern.ch/record/2633782

[2] “Causal machine learning,” arXiv. [Online]. Available: https://arxiv.org/pdf/2206.15475

[3] The New Atlantis, “Correlation, causation, and confusion,” The New Atlantis. [Online]. Available: https://www.thenewatlantis.com/publications/correlation-causation-and-confusion

[4] Wikipedia contributors, “Causality,” Wikipedia, Jun. 25, 2025. [Online]. Available: https://en.wikipedia.org/wiki/Causality

[5] J. Runge, “Structural causal models: a quick introduction,” Causality in Data Science, Medium, Aug. 30, 2023. [Online]. Available: https://medium.com/causality-in-data-science/structural-causal-models-a-quick-introduction-1ab49259e921

[6] K. Styppa, “What is Causal Machine Learning?,” Causality in Data Science, Medium, Aug. 21, 2023. [Online]. Available: https://medium.com/causality-in-data-science/what-is-causal-machine-learning-ceb480fd2902

[7] J. Peters, D. Janzing, and B. Schölkopf, Elements of Causal Inference: Foundations and Learning Algorithms. 2017. [Online]. Available: https://library.oapen.org/bitstream/20.500.12657/26040/1/11283.pdf

Thanks for reading ML Diaries. You are awesome!